yarn.nodemanager.vmem-check-enabled false

After submitting the job, YARN reports an error. Let's look at the NodeManager log.

2018-09-04 01:58:44,001 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Memory usage of ProcessTree 108769 for container-id container_1535979586125_0003_01_000001: 24.6 MB of 540 MB physical memory used; 2.5 GB of 1.1 GB virtual memory used

2018-09-04 01:58:44,001 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Process tree for container: container_1535979586125_0003_01_000001 running over twice the configured limit. Limit=1189085184, current usage = 2640506880

2018-09-04 01:58:44,001 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Container [pid=108769,containerID=container_1535979586125_0003_01_000001] is running beyond virtual memory limits. Current usage: 24.6 MB of 540 MB physical memory used; 2.5 GB of 1.1 GB virtual memory used. Killing container.

Dump of the process-tree for container_1535979586125_0003_01_000001 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 108833 108769 108769 108769 (java) 39 15 2524606464 5947 /root/jdk1.8.0_181/bin/java -Xmx461m -Dlog.file=/root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.log -Dlog4j.configuration=file:log4j.properties org.apache.flink.yarn.YarnApplicationMasterRunner

|- 108769 105708 108769 108769 (bash) 1 1 115900416 351 /bin/bash -c /root/jdk1.8.0_181/bin/java -Xmx461m -Dlog.file=/root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.log -Dlog4j.configuration=file:log4j.properties org.apache.flink.yarn.YarnApplicationMasterRunner 1> /root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.out 2> /root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.err

2018-09-04 01:58:44,002 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Removed ProcessTree with root 108769

2018-09-04 01:58:44,004 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.Container: Container container_1535979586125_0003_01_000001 transitioned from RUNNING to KILLING

2018-09-04 01:58:44,005 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch: Cleaning up container container_1535979586125_0003_01_000001

2018-09-04 01:58:45,827 INFO org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl: Sending out status for container: container_id { app_attempt_id { application_id { id: 3 cluster_timestamp: 1535979586125 } attemptId: 1 } id: 1 } state: C_RUNNING diagnostics: "Container [pid=108769,containerID=container_1535979586125_0003_01_000001] is running beyond virtual memory limits. Current usage: 24.6 MB of 540 MB physical memory used; 2.5 GB of 1.1 GB virtual memory used. Killing container.\nDump of the process-tree for container_1535979586125_0003_01_000001 :\n\t|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE\n\t|- 108833 108769 108769 108769 (java) 39 15 2524606464 5947 /root/jdk1.8.0_181/bin/java -Xmx461m -Dlog.file=/root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.log -Dlog4j.configuration=file:log4j.properties org.apache.flink.yarn.YarnApplicationMasterRunner \n\t|- 108769 105708 108769 108769 (bash) 1 1 115900416 351 /bin/bash -c /root/jdk1.8.0_181/bin/java -Xmx461m -Dlog.file=/root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.log -Dlog4j.configuration=file:log4j.properties org.apache.flink.yarn.YarnApplicationMasterRunner 1> /root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.out 2> /root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.err \n\n" exit_status: -1000

(The same C_RUNNING status message, with identical diagnostics and exit_status: -1000, is sent again at 01:58:46,897, 01:58:48,027 and 01:58:49,383.)

2018-09-04 01:58:49,579 WARN org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Exit code from container container_1535979586125_0003_01_000001 is : 143

2018-09-04 01:58:49,855 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.Container: Container container_1535979586125_0003_01_000001 transitioned from KILLING to CONTAINER_CLEANEDUP_AFTER_KILL

2018-09-04 01:58:49,857 INFO org.apache.hadoop.yarn.server.nodemanager.NMAuditLogger: USER=ww OPERATION=Container Finished - Killed TARGET=ContainerImpl RESULT=SUCCESS APPID=application_1535979586125_0003 CONTAINERID=container_1535979586125_0003_01_000001

2018-09-04 01:58:49,857 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.container.Container: Container container_1535979586125_0003_01_000001 transitioned from CONTAINER_CLEANEDUP_AFTER_KILL to DONE

2018-09-04 01:58:49,857 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.Application: Removing container_1535979586125_0003_01_000001 from application application_1535979586125_0003

2018-09-04 01:58:49,857 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.AppLogAggregatorImpl: Considering container container_1535979586125_0003_01_000001 for log-aggregation

2018-09-04 01:58:49,857 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event CONTAINER_STOP for appId application_1535979586125_0003

2018-09-04 01:58:49,857 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting absolute path : /tmp/hadoop-root/nm-local-dir/usercache/ww/appcache/application_1535979586125_0003/container_1535979586125_0003_01_000001

2018-09-04 01:58:50,008 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: Stopping resource-monitoring for container_1535979586125_0003_01_000001

2018-09-04 01:58:50,397 INFO org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl: Sending out status for container: container_id { app_attempt_id { application_id { id: 3 cluster_timestamp: 1535979586125 } attemptId: 1 } id: 1 } state: C_COMPLETE diagnostics: "Container [pid=108769,containerID=container_1535979586125_0003_01_000001] is running beyond virtual memory limits. Current usage: 24.6 MB of 540 MB physical memory used; 2.5 GB of 1.1 GB virtual memory used. Killing container.\nDump of the process-tree for container_1535979586125_0003_01_000001 :\n\t|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE\n\t|- 108833 108769 108769 108769 (java) 39 15 2524606464 5947 /root/jdk1.8.0_181/bin/java -Xmx461m -Dlog.file=/root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.log -Dlog4j.configuration=file:log4j.properties org.apache.flink.yarn.YarnApplicationMasterRunner \n\t|- 108769 105708 108769 108769 (bash) 1 1 115900416 351 /bin/bash -c /root/jdk1.8.0_181/bin/java -Xmx461m -Dlog.file=/root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.log -Dlog4j.configuration=file:log4j.properties org.apache.flink.yarn.YarnApplicationMasterRunner 1> /root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.out 2> /root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003/container_1535979586125_0003_01_000001/jobmanager.err \n\nContainer killed on request. Exit code is 143\n" exit_status: 143

2018-09-04 01:58:50,397 INFO org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl: Removed completed container container_1535979586125_0003_01_000001

2018-09-04 01:58:51,416 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.Application: Application application_1535979586125_0003 transitioned from RUNNING to APPLICATION_RESOURCES_CLEANINGUP

2018-09-04 01:58:51,422 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.AuxServices: Got event APPLICATION_STOP for appId application_1535979586125_0003

2018-09-04 01:58:51,422 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.application.Application: Application application_1535979586125_0003 transitioned from APPLICATION_RESOURCES_CLEANINGUP to FINISHED

2018-09-04 01:58:51,422 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.AppLogAggregatorImpl: Application just finished : application_1535979586125_0003

2018-09-04 01:58:51,422 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting absolute path : /tmp/hadoop-root/nm-local-dir/usercache/ww/appcache/application_1535979586125_0003

2018-09-04 01:58:51,434 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.AppLogAggregatorImpl: Starting aggregate log-file for app application_1535979586125_0003 at /tmp/logs/ww/logs/application_1535979586125_0003/machine1_42743.tmp

2018-09-04 01:58:51,512 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.AppLogAggregatorImpl: Uploading logs for container container_1535979586125_0003_01_000001. Current good log dirs are /root/yarn_study/hadoop-2.2.0/logs/userlogs

2018-09-04 01:58:51,513 INFO org.apache.hadoop.yarn.server.nodemanager.containermanager.logaggregation.AppLogAggregatorImpl: Finished aggregate log-file for app application_1535979586125_0003

2018-09-04 01:58:51,514 INFO org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Deleting path : /root/yarn_study/hadoop-2.2.0/logs/userlogs/application_1535979586125_0003

Without further ado, let's go straight to the source code and see where the problem is.

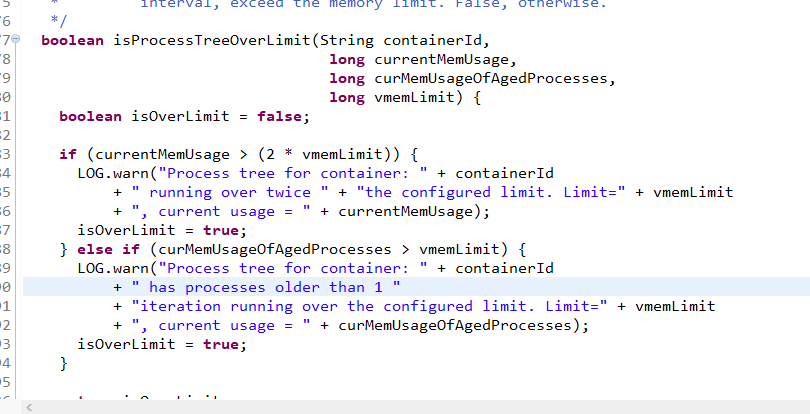

First, locate the class that logs the initial error:

org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl

Then find the corresponding location in the source:

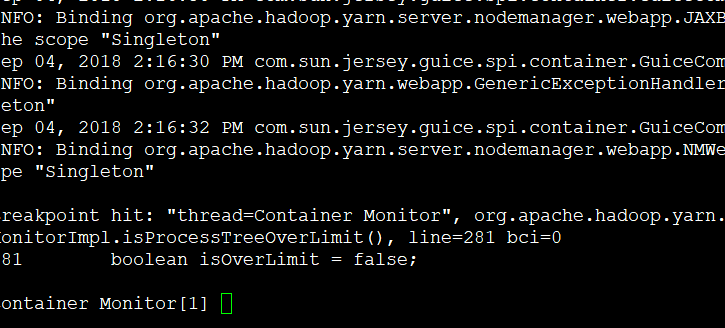

Reading the code alone still doesn't make things clear, so let's set a breakpoint and debug it directly.

stop at org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl:281

The breakpoint is hit.

Let's analyze what happens.

Here is the debugger output:

281 boolean isOverLimit = false;

Container Monitor[1] next

> Step completed: "thread=Container Monitor", org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl.isProcessTreeOverLimit(), line=283 bci=3

283 if (currentMemUsage > (2 * vmemLimit)) {

Container Monitor[1] print currentMemUsage

currentMemUsage = 2647941120

Container Monitor[1] print vmemLimit

vmemLimit = 1189085184

Container Monitor[1] next

> Step completed: "thread=Container Monitor", org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl.isProcessTreeOverLimit(), line=284 bci=14

284 LOG.warn("Process tree for container: " + containerId

Now let's look at the call stack:

Container Monitor[1] where

[1] org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl.isProcessTreeOverLimit (ContainersMonitorImpl.java:284)

[2] org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl$MonitoringThread.run (ContainersMonitorImpl.java:406)
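The condition the debugger stepped through can be replayed outside YARN with the values printed by jdb. The following is a minimal Java sketch, not the Hadoop source: the class name `VmemOverLimitSketch` is made up for illustration, and the second branch (memory held by processes that have survived more than one monitoring pass) is an assumption about the rest of the method. Only the first branch is confirmed by the debug session at line 283.

```java
// Minimal sketch of the over-limit decision; not the actual Hadoop class.
final class VmemOverLimitSketch {

    /**
     * Returns true when the process tree should be treated as over its limit.
     * The first branch mirrors the check seen at line 283 in the debugger.
     * The second branch is an assumption about the remainder of the method,
     * included only to make the sketch complete.
     */
    static boolean isProcessTreeOverLimit(long currentMemUsage,
                                          long curMemUsageOfAgedProcesses,
                                          long vmemLimit) {
        if (currentMemUsage > 2 * vmemLimit) {
            return true; // "running over twice the configured limit"
        }
        return curMemUsageOfAgedProcesses > vmemLimit;
    }

    public static void main(String[] args) {
        long currentMemUsage = 2647941120L; // printed by jdb
        long vmemLimit = 1189085184L;       // printed by jdb
        long agedUsage = 0L;                // not shown in the session; placeholder

        System.out.println("2 * vmemLimit = " + (2 * vmemLimit));
        System.out.println("over limit    = "
                + isProcessTreeOverLimit(currentMemUsage, agedUsage, vmemLimit));
    }
}
```

With these values, twice the limit is 2378170368 bytes, which the 2647941120 bytes in use exceeds, so the first branch alone is enough to mark the container for killing.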

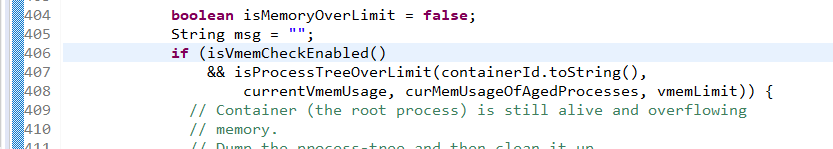

What actually triggers the kill is:

Let's see where the values of the last three parameters come from.

To do that, set a breakpoint in

stop in org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl$MonitoringThread.run

and keep observing.
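As a rough cross-check of where `vmemLimit` comes from, the figure in the log can be reproduced by hand. The sketch below assumes the virtual memory limit is the container's physical memory limit multiplied by a virtual-to-physical ratio of 2.1, which to my understanding is the default of `yarn.nodemanager.vmem-pmem-ratio`. The class and method names are hypothetical, and the ratio is an assumption that was not read from this cluster's configuration.

```java
// Hypothetical helper that reproduces the Limit value printed in the log.
final class VmemLimitSketch {

    /**
     * Computes a virtual memory limit in bytes from a physical memory limit
     * in megabytes and a vmem/pmem ratio. The ratio is an assumption here.
     */
    static long vmemLimitBytes(long pmemLimitMb, double vmemPmemRatio) {
        long pmemLimitBytes = pmemLimitMb * 1024L * 1024L;
        return (long) (pmemLimitBytes * vmemPmemRatio);
    }

    public static void main(String[] args) {
        // 540 MB is the physical memory limit reported in the log.
        System.out.println("vmemLimit = " + vmemLimitBytes(540L, 2.1));
    }
}
```

Under that assumption the result is 1189085184 bytes, which matches both `Limit=1189085184` in the log and the `vmemLimit` value printed by jdb, and corresponds to the "1.1 GB virtual memory" in the diagnostics.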

The root cause lies in this setting:

vmemCheckEnabled = conf.getBoolean(YarnConfiguration.NM_VMEM_CHECK_ENABLED,

YarnConfiguration.DEFAULT_NM_VMEM_CHECK_ENABLED);

Time is limited, so for now we simply turn this check off:

yarn.nodemanager.vmem-check-enabled false
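In practice this setting would normally go into `yarn-site.xml` on each NodeManager. The fragment below is a sketch that assumes the standard Hadoop configuration file layout. Since the value appears to be read when the monitor is initialized, the NodeManager most likely needs a restart before the change takes effect.

```xml
<!-- yarn-site.xml: disable the NodeManager virtual memory check -->
<property>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>
```

Disabling the check removes the virtual memory safeguard for every container on that node, so it is best treated as a stopgap rather than a final fix.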